Overview

This connector can be used both as a source and as a destination:

- As a source, you can import one or more BigQuery dataset tables.

- As a destination, you can sync data to any BigQuery dataset table.

Setup

Prerequisites

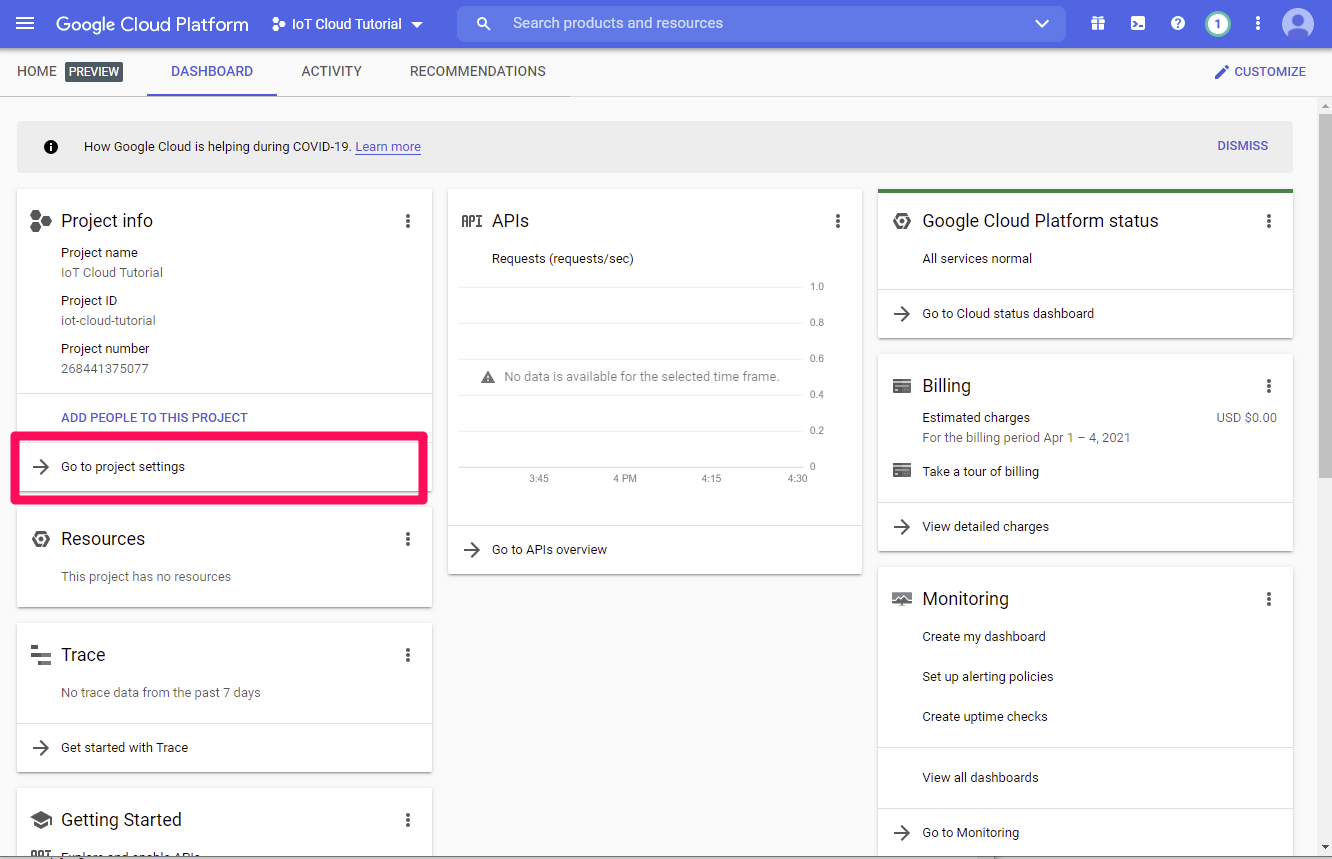

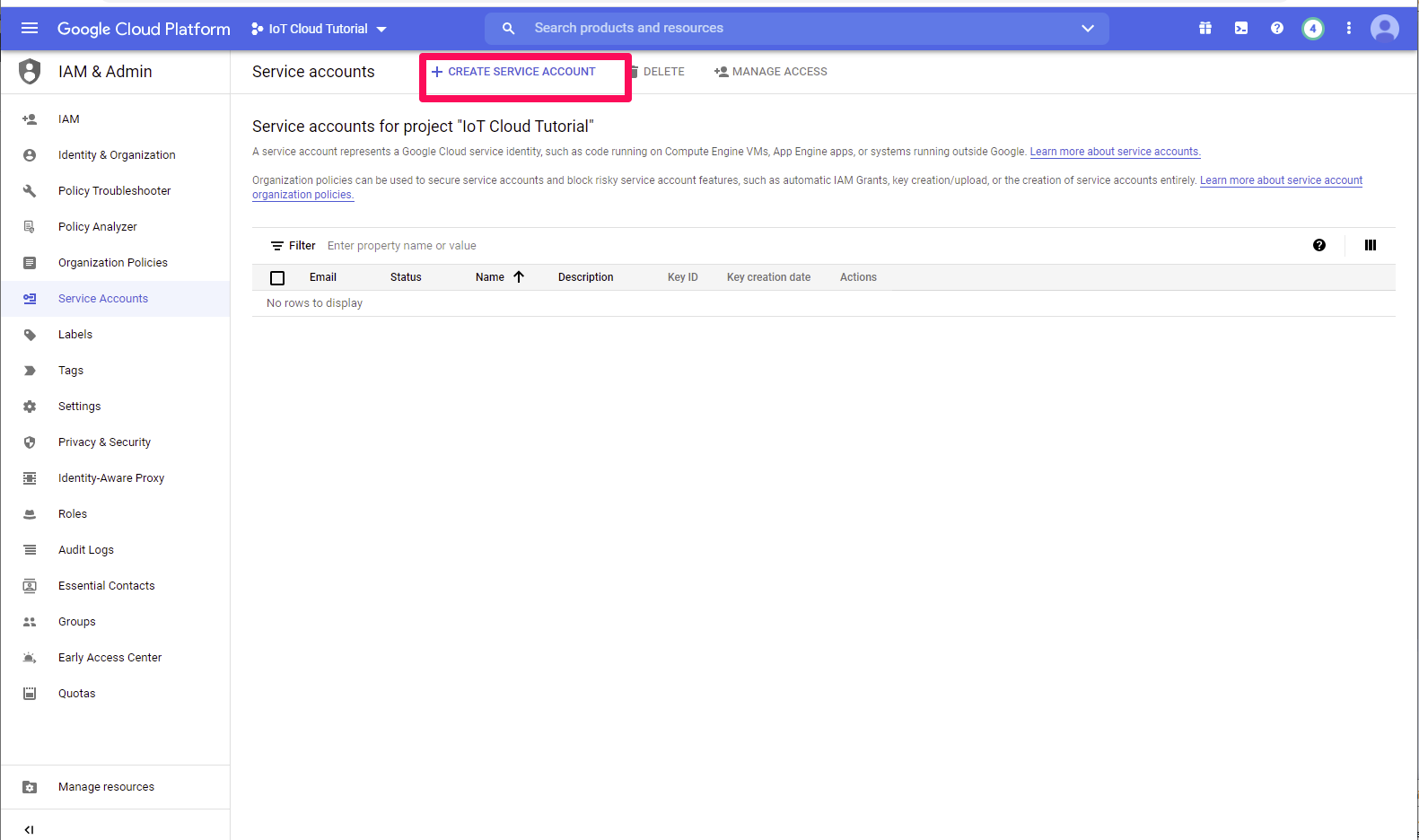

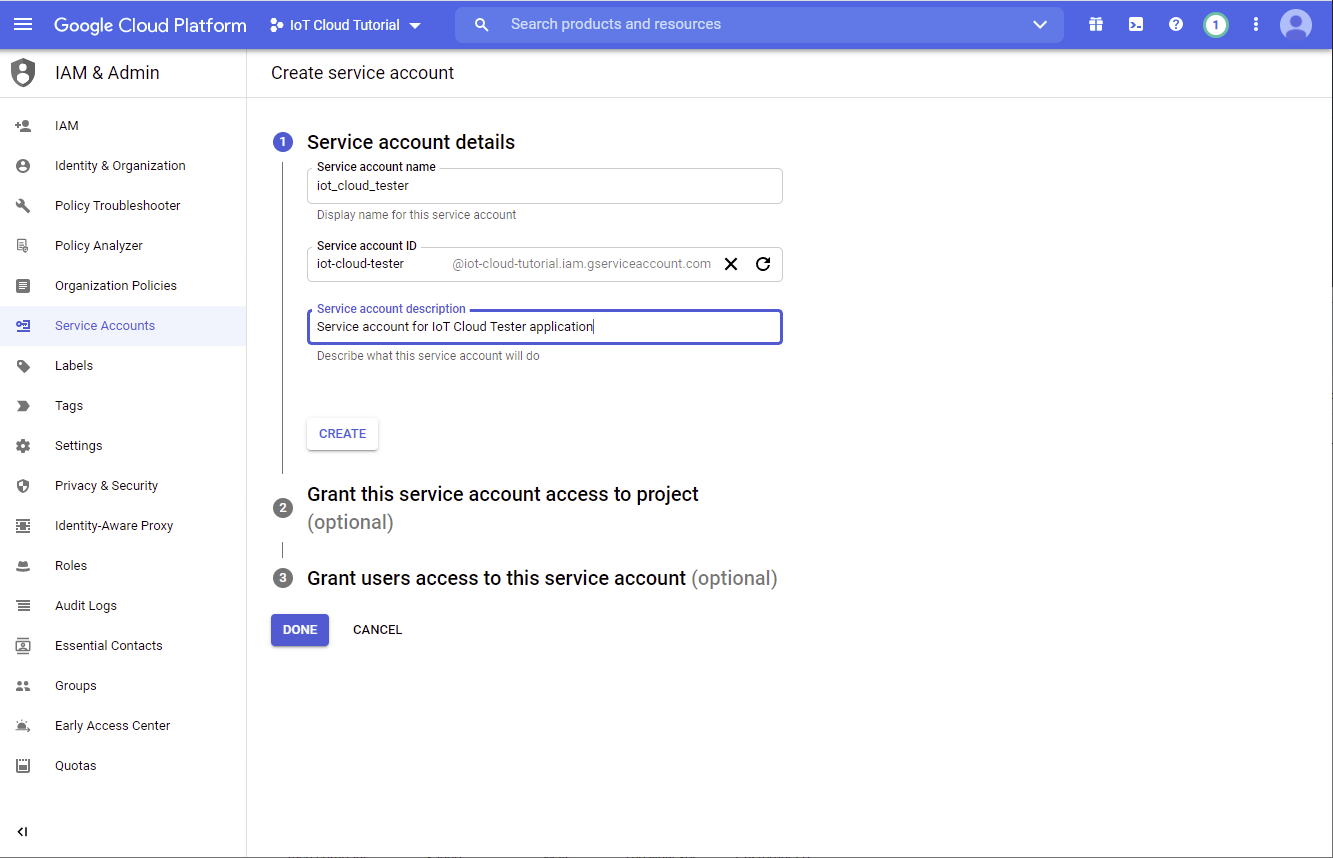

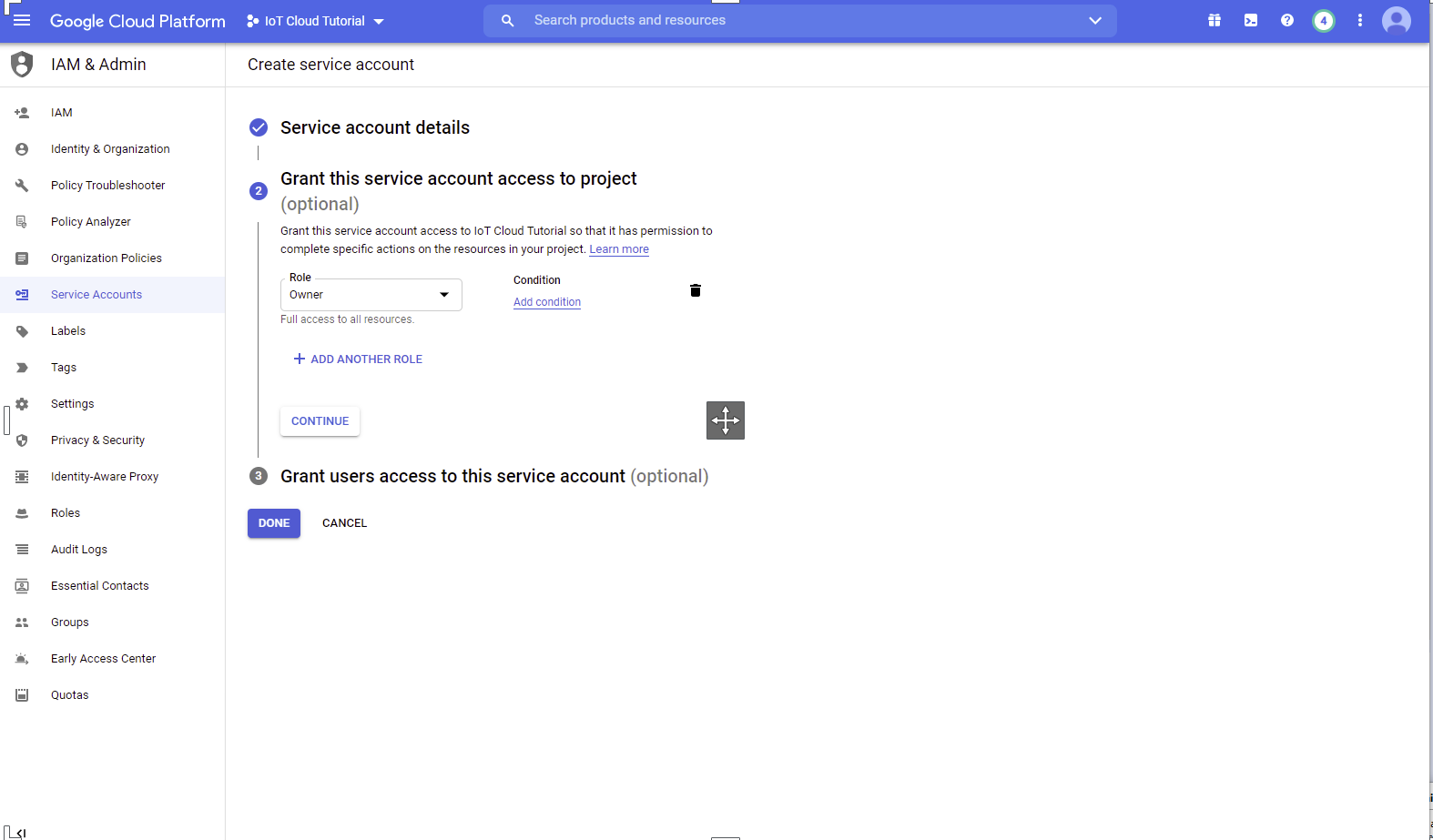

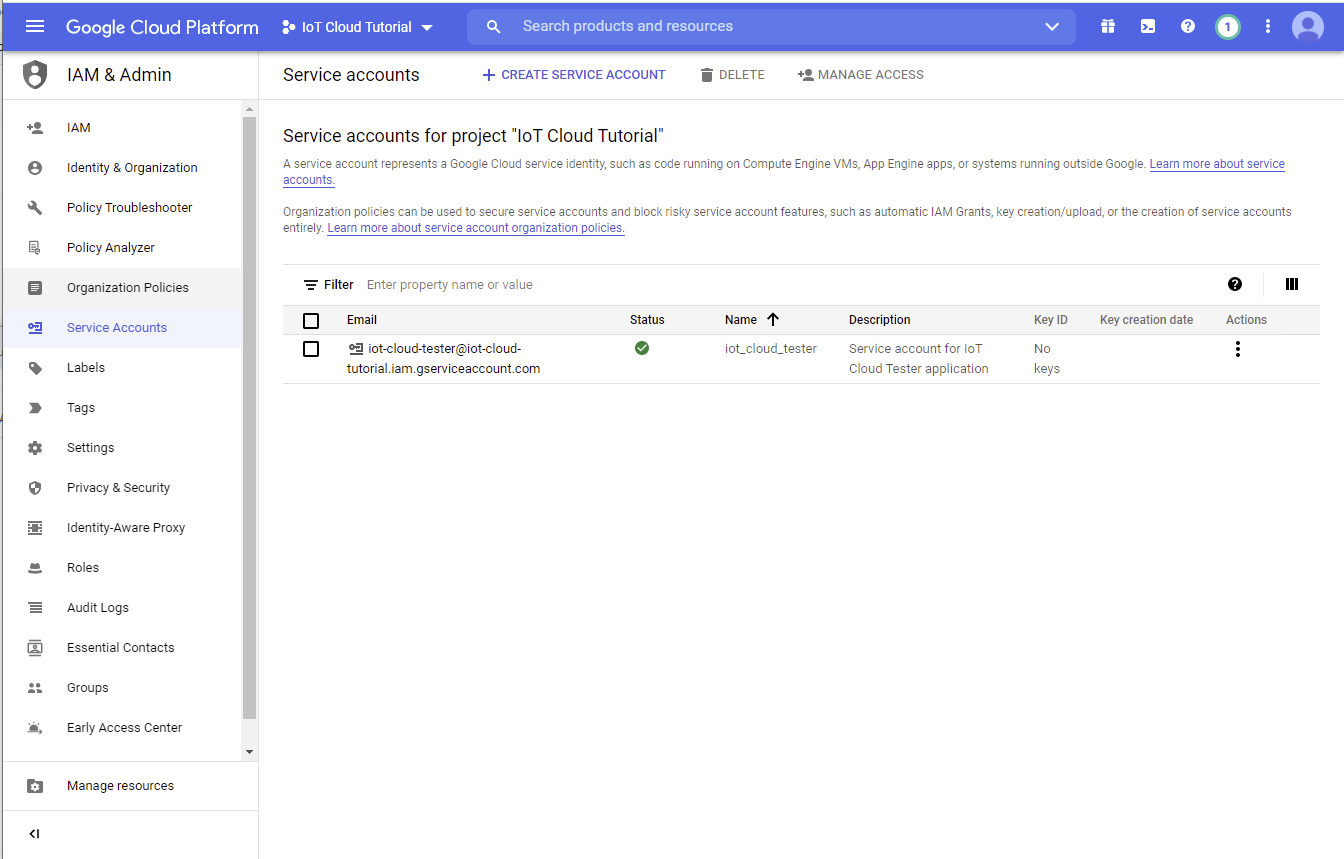

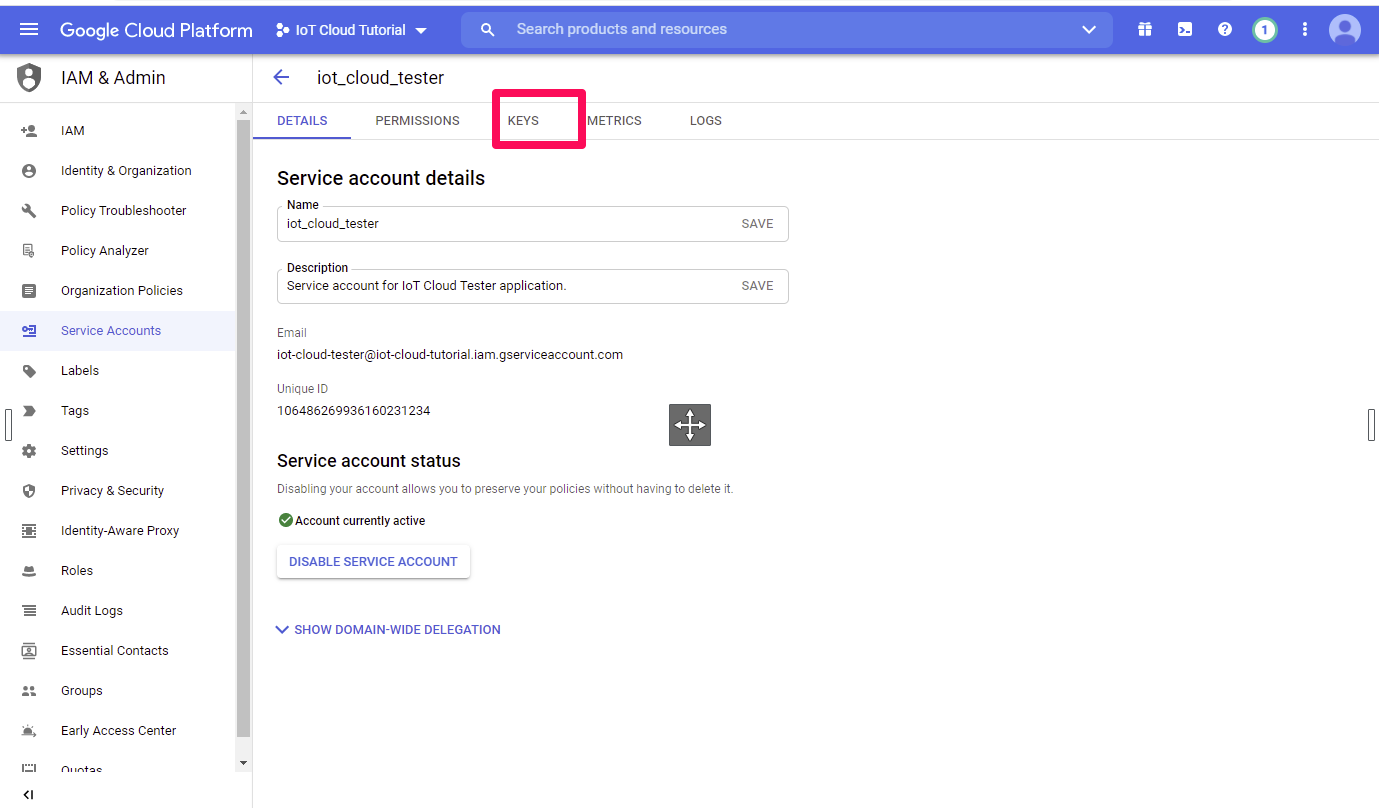

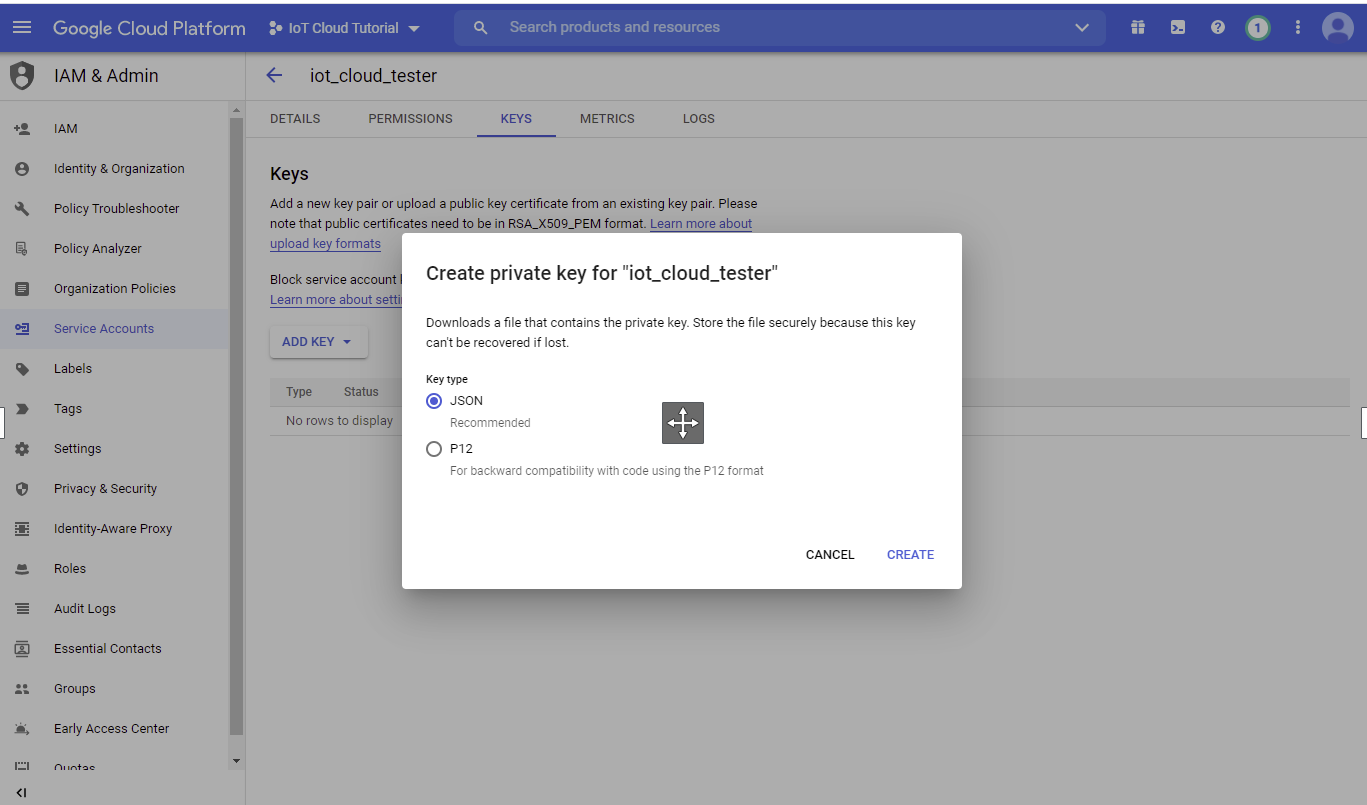

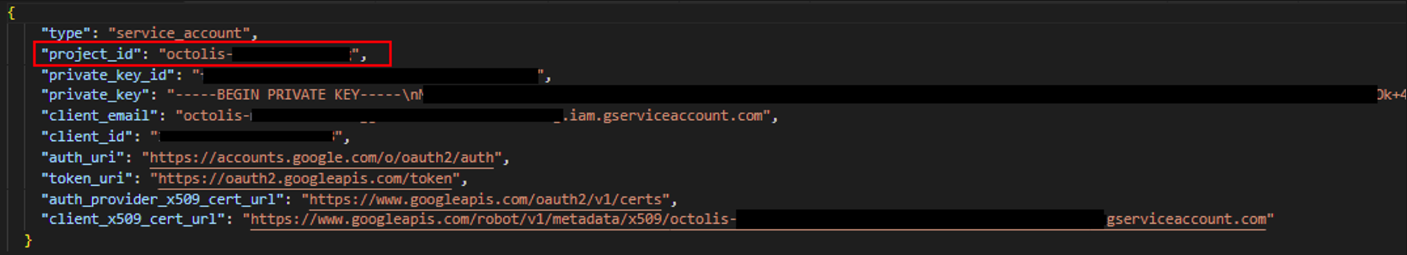

You will need a Google Cloud service account key with permission to access the project in your BigQuery account.

The key is a JSON file containing several fields.

How to get a service account key?

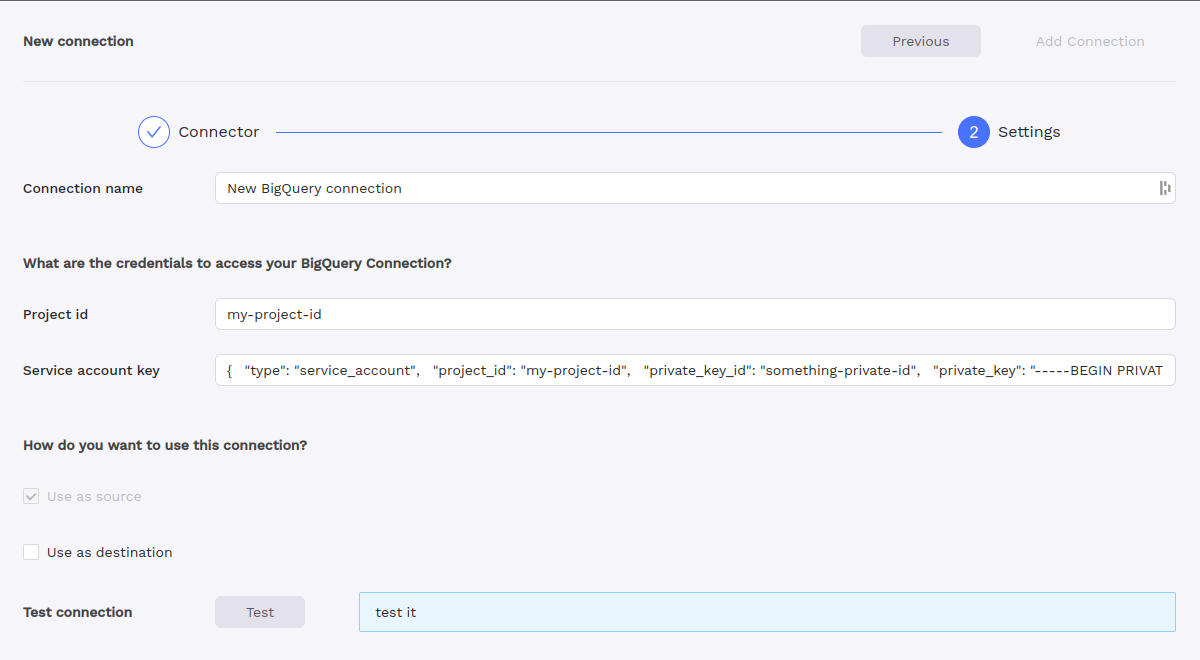

Create an Octolis BigQuery connection

To create a BigQuery connection, navigate to the Connections page and add a BigQuery connection as a source or a destination.

Copy and paste the content of the key file into the “Service account key” field.

You can find the Project id in the key file, under the project_id key.
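To sanity-check a key before pasting it, the following Python sketch parses the key file content, checks for a few fields that Google Cloud service account keys normally contain, and returns project_id. The list of required fields and the function name read_project_id are assumptions for illustration; Octolis may validate the key differently.

```python
import json

# Assumption: a subset of the fields found in a standard Google Cloud
# service account key file. The exact checks Octolis performs are not documented here.
REQUIRED_FIELDS = ("type", "project_id", "private_key_id", "private_key", "client_email")


def read_project_id(key_text: str) -> str:
    """Parse pasted service account key content and return its project_id.

    Raises ValueError if the text is not a JSON object or lacks expected fields.
    """
    try:
        key = json.loads(key_text)
    except json.JSONDecodeError as exc:
        raise ValueError(f"key is not valid JSON: {exc}") from exc
    if not isinstance(key, dict):
        raise ValueError("key must be a JSON object")
    missing = [field for field in REQUIRED_FIELDS if field not in key]
    if missing:
        raise ValueError(f"key is missing fields: {', '.join(missing)}")
    if key["type"] != "service_account":
        raise ValueError("key is not a service account key")
    return key["project_id"]


# Placeholder values only; a real key file contains actual credentials.
sample = json.dumps({
    "type": "service_account",
    "project_id": "my-project-123",
    "private_key_id": "abc123",
    "private_key": "<redacted>",
    "client_email": "octolis@my-project-123.iam.gserviceaccount.com",
})
print(read_project_id(sample))  # my-project-123
```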

Import (using BigQuery as a source)

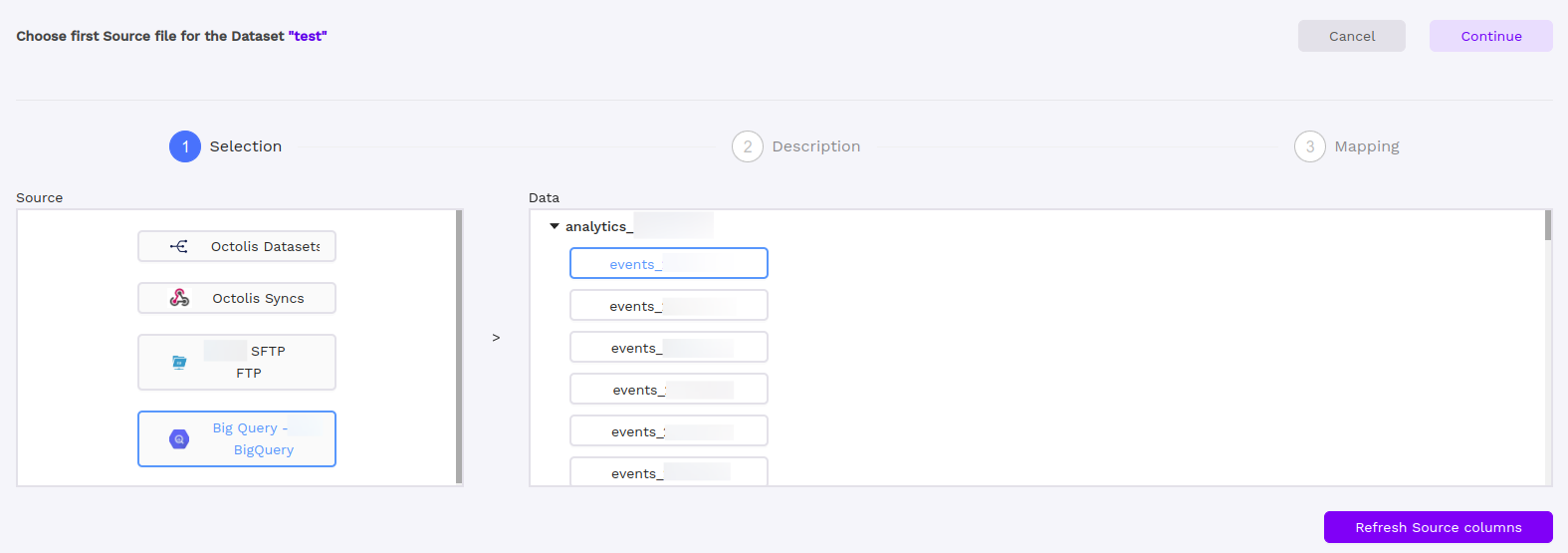

BigQuery dataset tables can be imported into Octolis by using an Octolis BigQuery connection as the source of a dataset, either when creating a new dataset or when adding a new source to an existing one.

In the Data section of the Selection step, you will find a list of BigQuery datasets and can choose from their tables.

Import multiple tables using a wildcard table

Coming soon

Records filtering

Coming soon

Configuration

Hint: If your dataset table has no single field that uniquely identifies each record, you can use a combination of fields as the key.
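Before relying on a combination of fields, it can help to confirm that it really is unique. A minimal Python sketch, assuming a sample of rows has already been fetched as dictionaries; the column names email and store_id are hypothetical:

```python
from typing import Iterable, Mapping, Sequence


def is_unique_key(rows: Iterable[Mapping[str, object]], key_fields: Sequence[str]) -> bool:
    """Return True if the combination of key_fields identifies every row uniquely."""
    seen = set()
    for row in rows:
        # Build the composite key as a tuple of the selected field values.
        key = tuple(row[field] for field in key_fields)
        if key in seen:
            return False
        seen.add(key)
    return True


rows = [
    {"email": "ana@example.com", "store_id": 1, "amount": 20},
    {"email": "ana@example.com", "store_id": 2, "amount": 35},
    {"email": "bob@example.com", "store_id": 1, "amount": 12},
]

print(is_unique_key(rows, ["email"]))              # False: email repeats
print(is_unique_key(rows, ["email", "store_id"]))  # True
```

Here email alone is not unique, but the pair (email, store_id) is, so that combination could serve as the key.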

Syncing (using BigQuery as a destination)

Field mapping

You can sync any column from your dataset to a BigQuery dataset table.
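Conceptually, a field mapping pairs each source column with a destination column. The sketch below only illustrates that idea and is not how Octolis implements syncing; the function name apply_field_mapping and all column names are hypothetical. Columns that are not mapped are left out, and a mapped column missing from a record becomes None.

```python
from typing import Dict, List, Mapping


def apply_field_mapping(
    records: List[Mapping[str, object]], mapping: Mapping[str, str]
) -> List[Dict[str, object]]:
    """Rename source columns to destination column names.

    Unmapped source columns are dropped; missing mapped columns become None.
    """
    return [
        {dest: record.get(src) for src, dest in mapping.items()}
        for record in records
    ]


mapping = {"email": "customer_email", "first_name": "first_name"}
records = [{"email": "ana@example.com", "first_name": "Ana", "score": 7}]
print(apply_field_mapping(records, mapping))
# [{'customer_email': 'ana@example.com', 'first_name': 'Ana'}]
```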

Destination table

The connector can synchronize data to an existing dataset table, or you can create a new dataset table by specifying a table name that does not yet exist. When choosing an existing table as the destination, make sure its structure matches the fields you intend to synchronize, and make sure it is empty to prevent data loss.
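The two checks above (matching structure, empty table) can be sketched in Python as follows. This is an assumption-laden illustration, not Octolis's own validation: DestinationTable and check_destination are hypothetical names, and types are compared as exact strings, so BigQuery aliases such as INTEGER and INT64 would need normalizing first. With the google-cloud-bigquery client, the inputs could come from client.get_table(table_id), whose schema is a list of SchemaField objects (name, field_type) and whose num_rows gives the row count.

```python
from dataclasses import dataclass
from typing import Dict, List, Mapping


@dataclass
class DestinationTable:
    """Hypothetical description of an existing BigQuery table."""
    schema: Dict[str, str]  # column name -> BigQuery type, e.g. "STRING"
    num_rows: int


def check_destination(table: DestinationTable, fields: Mapping[str, str]) -> List[str]:
    """Return a list of problems; an empty list means the table looks safe to use."""
    problems = []
    for name, expected_type in fields.items():
        actual_type = table.schema.get(name)
        if actual_type is None:
            problems.append(f"missing column {name}")
        elif actual_type != expected_type:
            problems.append(f"column {name} is {actual_type}, expected {expected_type}")
    if table.num_rows > 0:
        problems.append(f"table is not empty ({table.num_rows} rows)")
    return problems


table = DestinationTable(schema={"customer_email": "STRING"}, num_rows=3)
print(check_destination(table, {"customer_email": "STRING", "first_name": "STRING"}))
# ['missing column first_name', 'table is not empty (3 rows)']
```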